Key points

- Image detection and recognition in wheat and sorghum can be used to indicate plant health, maturity or in-paddock variability

- It involves the automatic counting of wheat and sorghum heads in photos and videos

- The University of Queensland has been working to improve image detection using artificial intelligence and global image datasets, and has been testing real-time machine learning cameras that could be used on vehicles and drones

The image-manipulation technology that created the ‘deepfake’ photo of actor Tom Cruise that went viral on social media in early 2021 might seem light years from the technology that grains researchers are interested in.

However, the University of Queensland’s Professor Scott Chapman says image detection research in wheat and sorghum can also benefit from this form of deep learning and artificial intelligence that merges images to create fake events or scenes.

With GRDC support, the crop physiology professor and University of Queensland data scientists Dr Yanyang Gu and Chrisbin James have been working to improve image detection in wheat and sorghum.

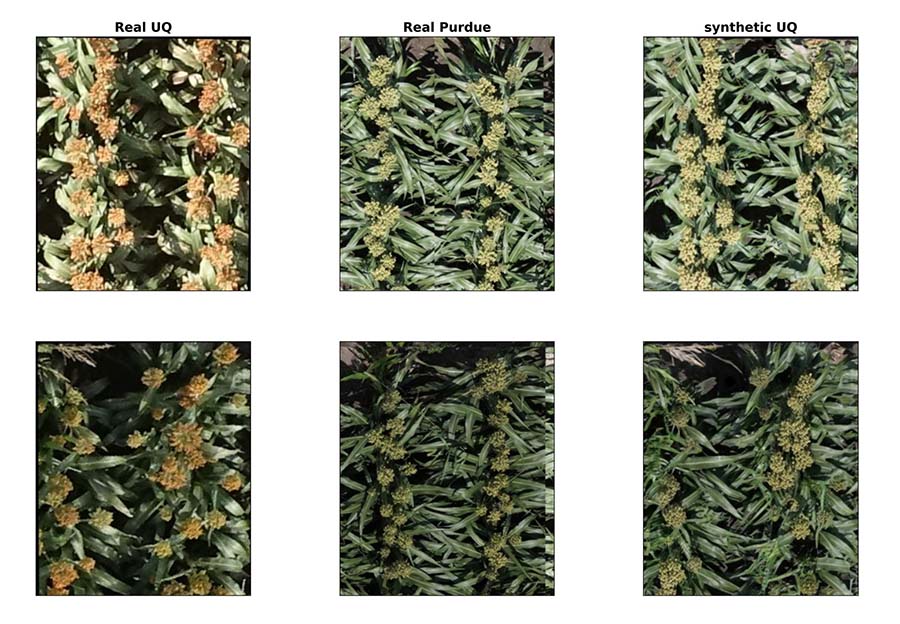

An example of how GAN technology can be used to combine images. Photo: Courtesy Scott Chapman

The tasks have included using artificial intelligence to build robust deep learning models, contributing to a global image dataset and testing real-time machine learning cameras that could be mounted on vehicles and drones.

Where’s Wally?

Image recognition and detection is a modern-day Where’s Wally? methodology. It can automatically identify, detect and count objects or features in a digital image or video.

Partnering with researchers from Arvalis in France and the University of Tokyo, Japan, the University of Queensland team applied these methods to count wheat and sorghum heads in images and videos.
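The article does not describe the team's detection models, so the following is only a generic sketch of how detection-based counting commonly works, assuming a detector (not shown) that returns one bounding box and confidence score per candidate head. Low-confidence boxes are discarded, duplicate boxes on the same head are removed with greedy non-maximum suppression (NMS), and the surviving boxes are counted. The `Box` class, the `count_heads` function and the 0.5 thresholds are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned bounding box in pixel coordinates with a detector confidence score."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    score: float = 1.0

    def area(self):
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a, b):
    """Intersection over union of two boxes (0.0 when they do not overlap)."""
    ix = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    iy = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    inter = ix * iy
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def count_heads(detections, score_threshold=0.5, iou_threshold=0.5):
    """Count heads as detections that pass a confidence filter and greedy NMS."""
    # Keep confident detections, highest score first.
    candidates = sorted(
        (d for d in detections if d.score >= score_threshold),
        key=lambda d: d.score,
        reverse=True,
    )
    kept = []
    for det in candidates:
        # Drop a box that heavily overlaps a higher-scoring box already kept,
        # since both most likely mark the same head.
        if all(iou(det, k) < iou_threshold for k in kept):
            kept.append(det)
    return len(kept)


# Hypothetical detector output for one image.
detections = [
    Box(10, 10, 50, 60, score=0.95),      # a head
    Box(12, 12, 52, 62, score=0.80),      # duplicate box on the same head
    Box(100, 40, 140, 90, score=0.90),    # a second head
    Box(200, 200, 230, 240, score=0.30),  # low confidence, filtered out
]
print(count_heads(detections))  # → 2
```

Sorting by score before suppression means that when two boxes overlap, the more confident one is the box that survives.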

Images of cereal heads captured with drones, handheld devices or cameras mounted on booms can then be used to indicate plant health, maturity or in-paddock variability.

“However, deep learning models are often not robust,” Professor Chapman says. “A model may work in a certain field or light but is not easily transferable to other situations. So, you may be able to create something that can count the number of wheat heads in Narrabri, but if you brought that same technology to Dalby its error rate could be high.”

This is called domain shift, he says. “The domain is the set of photos you have taken, say at a single date and place. Ideally you want a model that can work with diverse imagery, different lighting conditions, soil background, crop age, head colour, genotype and so on, without collecting additional data.”
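One way to see domain shift is to group evaluation images by domain, taken here as a (site, date) pair following Professor Chapman's definition, and report the counting error separately for each group. The sketch below assumes mean absolute relative counting error as the metric; the records are invented numbers chosen only to show the calculation, not results from the project, and `per_domain_error` is a hypothetical helper.

```python
from collections import defaultdict
from statistics import mean

# Invented evaluation records for illustration only:
# (site, date, hand-counted heads, model-counted heads).
records = [
    ("Narrabri", "2020-10-01", 200, 196),
    ("Narrabri", "2020-10-01", 180, 183),
    ("Dalby", "2020-10-15", 210, 168),
    ("Dalby", "2020-10-15", 190, 171),
]


def per_domain_error(records):
    """Mean absolute relative counting error for each (site, date) domain."""
    errors = defaultdict(list)
    for site, date, true_count, predicted in records:
        errors[(site, date)].append(abs(predicted - true_count) / true_count)
    return {domain: mean(errs) for domain, errs in errors.items()}


for domain, err in per_domain_error(records).items():
    print(domain, f"{err:.1%}")
```

A model trained only on the first domain that shows a much higher error on the second is exhibiting the kind of domain shift described above.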

One way to make models more robust is to collect and label more imagery. Professor Chapman says this can be expensive, time-consuming and impractical, which is where the deepfake technique known as generative adversarial networks (GAN) helps.

Image labelling involves identifying and marking various details in an image. For cereal crops it can require outlining the wheat or sorghum heads with ‘bounding boxes’.
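Because each bounding box marks one head, a labelled image also gives its true head count. The sketch below assumes a label file with one row per head and the box stored as `[x_min, y_min, width, height]` in pixels; the column names, file layout and `heads_per_image` helper are hypothetical and not taken from any particular dataset.

```python
import csv
import io
import json
from collections import Counter

# Hypothetical label file: one row per labelled head.
LABELS_CSV = """image_id,bbox
plot_001,"[834.0, 222.0, 56.0, 36.0]"
plot_001,"[226.0, 548.0, 130.0, 58.0]"
plot_001,"[377.0, 504.0, 74.0, 160.0]"
plot_002,"[10.0, 20.0, 40.0, 45.0]"
"""


def heads_per_image(csv_text):
    """Count labelled heads per image, one head per well-formed bounding box."""
    counts = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        _x, _y, w, h = json.loads(row["bbox"])  # parse the box as a list of numbers
        if w <= 0 or h <= 0:
            continue  # skip degenerate boxes instead of counting them as heads
        counts[row["image_id"]] += 1
    return counts


print(heads_per_image(LABELS_CSV))
```

These per-image counts are the reference values a counting model's output would be compared against.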

To adapt a model to a new domain, such as Dalby, researchers would normally have to label many more images. GAN offers a shortcut: it allows the ‘Narrabri’ images to take on the ‘style’ of the ‘Dalby’ images while the heads stay in the same place.
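A GAN learns its image-to-image mapping from many examples, which is beyond a short snippet. The sketch below swaps in a much simpler technique, per-channel colour statistics matching, purely to show the bookkeeping that makes the approach work: the source image's appearance is shifted towards the target domain, but no pixel moves, so the original bounding-box labels can be reused unchanged. Pixels are plain `(r, g, b)` tuples to keep it dependency-free, and all names and sample values are hypothetical.

```python
from statistics import mean, pstdev


def channel_stats(pixels):
    """Per-channel (mean, population standard deviation) for (r, g, b) pixels."""
    return [(mean(ch), pstdev(ch)) for ch in zip(*pixels)]


def transfer_style(source_pixels, target_pixels):
    """Shift and scale each colour channel of the source so its mean and standard
    deviation match the target. Only colours change; pixel positions do not."""
    src = channel_stats(source_pixels)
    tgt = channel_stats(target_pixels)
    out = []
    for px in source_pixels:
        new_px = []
        for value, (s_mean, s_std), (t_mean, t_std) in zip(px, src, tgt):
            scale = t_std / s_std if s_std else 1.0
            new_value = (value - s_mean) * scale + t_mean
            new_px.append(min(255.0, max(0.0, new_value)))  # clamp to 0..255
        out.append(tuple(new_px))
    return out


def make_synthetic_example(source_pixels, source_boxes, target_pixels):
    """Pair the restyled image with the ORIGINAL boxes; they remain valid
    because the heads have not moved."""
    return transfer_style(source_pixels, target_pixels), list(source_boxes)


# Tiny hypothetical 'images' (four pixels each) standing in for two domains.
narrabri = [(120, 140, 60), (130, 150, 70), (110, 130, 50), (140, 160, 80)]
dalby = [(180, 150, 100), (200, 170, 120), (190, 160, 110), (210, 180, 130)]
boxes = [(0, 0, 2, 2)]  # hypothetical head label on the Narrabri image
image, labels = make_synthetic_example(narrabri, boxes, dalby)
```

The synthetic pairs would then be added to the training set alongside the original labelled images.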

Professor Chapman says the original labels are retained in the synthetic ‘Narrabri/Dalby’ images, which are then fed back into the model to train it to find heads in ‘Dalby’ images.

“When we did this, it reduced counting error in sorghum images from 10 per cent to two per cent, making the model much more robust.”
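The article does not say exactly how counting error was measured. Assuming it is the relative counting error, comparing the model's count $\hat{N}$ with the hand count $N$ for an image, the figures translate as follows for a hypothetical image containing 100 heads:

```latex
E = \frac{\lvert \hat{N} - N \rvert}{N} \times 100\%,
\qquad
N = 100,\ \hat{N} = 90 \;\Rightarrow\; E = 10\%,
\qquad
N = 100,\ \hat{N} = 98 \;\Rightarrow\; E = 2\%.
```

In other words, under this assumption the model went from missing or over-counting about one head in ten to about one head in fifty.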

University of Queensland researcher Professor Scott Chapman. Photo: Dom Jarvis

Data, data, data

As well as using GAN to create more training opportunities, the team helped fund the Global Wheat Head Dataset (GWHD).

It is the first large-scale dataset for wheat head detection from field optical images, with more than 180,000 heads from cultivars across different continents.

Run in 2020 on Kaggle, an online community of data scientists and machine learning practitioners, the GWHD competition attracted more than 2000 teams (Kaggle - Global Wheat Detection).

Professor Chapman says the competition provided new ideas that were tested in the wheat models.

The competition was run again in 2021 with an improved dataset (Global Wheat Challenge 2021) and is being maintained as a public benchmark computing resource at Stanford University.

Future

The final part of this project has demonstrated that deep learning models can run in real time on new types of machine learning cameras that have only just become available.

“We are testing these cameras for potential use by growers and consultants who want to count and document heads and damage on heads, or potentially put cameras on tractors or drones to document spatial variability in the field.”
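Documenting spatial variability from a camera on a tractor or drone comes down to attaching a position to each frame's head count and summarising counts by location. A minimal sketch, assuming each frame has already been counted and tagged with its position in metres on a local paddock grid (real GPS coordinates would first need projecting); the `grid_counts` function, the 10 m cell size and the sample observations are hypothetical.

```python
from collections import defaultdict
from math import floor
from statistics import mean


def grid_counts(observations, cell_size_m=10.0):
    """Average per-frame head count in each square grid cell.

    observations: iterable of (x_m, y_m, head_count), with positions in metres
    on an assumed local paddock grid.
    """
    cells = defaultdict(list)
    for x, y, count in observations:
        cell = (floor(x / cell_size_m), floor(y / cell_size_m))
        cells[cell].append(count)
    return {cell: mean(counts) for cell, counts in cells.items()}


# Hypothetical per-frame counts along a short run.
obs = [(2.0, 3.0, 40), (7.5, 1.0, 44), (15.0, 2.0, 25), (18.0, 9.0, 31)]
for cell, avg in sorted(grid_counts(obs).items()):
    print(cell, avg)
```

The sketch averages per-frame counts (a density-style measure) rather than summing them, because consecutive video frames can overlap and capture the same heads more than once.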

The University of Queensland is discussing commercialisation options with GRDC.

Professor Chapman says the short project has gone well, in part due to engagement with international partners. “One of the key things we set out to do is design a methodology to build robust new counting models to understand spatial variability and for that to be adaptable to different domains.”

Funding for the current project ends in February 2022.

More information: Scott Chapman, scott.chapman@uq.edu.au; Dr Jeff Cumpston, jeff.cumpston@grdc.com.au, 0437 160 693